Installing and will report back - will this be sufficient to test? I also have the Kibana Pro plugin.

nothing changed on Kibana’s side, so I think it will

Yep can confirm this is was an ES issue, you can go ahead and update the ES plugin alone.

Thanks, installed on my test cluster and the new cluster where this has been an issue and letting it run.

I’d say it’s looking good. Installed yesterday on two clusters with lower use and logged into both this morning successfully! Thank you!

Great! Thanks for reporting the issue and tests!

Good news:

I can log into the Kibana dashboards with LDAP credentials without having to do anything funky.

Bad news:

I applied the 1.19.5_pre6 to another cluster and elasticsearch completely stopped receiving logs. Investigating, the logstash systems sending output to the cluster were logging 403s on bulk actions:

[2020-04-22T13:29:10,889][ERROR][logstash.outputs.elasticsearch][main] Encountered a retryable error. Will Retry with exponential backoff {:code=>403, :url=>"https://es2-0...:9200/_bulk"}

Confirmed that the logstash user/pass in ROR were working correctly and that I could connect to the elasticsearch nodes with a curl from the logstash boxes. Was at a complete loss (no errors in elasticsearch logs) until I stopped the cluster, removed the 1.19.5_pre6 ror from all the nodes, then reinstalled 1.18.9 and restarted the cluster.

Now I’m not getting the 403 errors on the bulk updates.

So as nice as the good news is, the bad news is much worse. Having to revert this change on all my clusters where it’s applied.

I’ve reverted from 1.19.5_pre6 on all my clusters due to the above issues.

Chris, can you look in the es logs, and try to find a log line with “FORBIDDEN” written in it corresponding to when you hsd those 403 errors in logstash?

@cmh could you please test this one:

I’ve done one more improvement related to thread pool for LDAP and maybe this is the issue. If not, we need to more info and find any grip to start with.

@coutoPL looks like an acl issue if it’s responding 403 though. No?

between 1.18.9 and current pre version was a lot of changes, so right - this issue doesn’t have to be strictly correlated with LDAP issue from the beginning of this thread

That was the thing that made me take longer to find the issue - there was nothing in the logs to make me think it was ROR causing the 403, and since I had verified via curl with no issues, I thought that ruled it out. It wasn’t until I was looking at how the logs precipitously stopped right around when I had upgraded to the pre6.

I’ll try the pre8 on the one cluster that mostly nobody (but one particularly vociferous dev) looks at and will report back.

Starting from last of the newest (pre) versions of ROR our core is much more secure. Before there was rather a strategy to pass request even if found indices cannot be altered. Now at the end of handling, during indices altering, ROR can forbid a request (from a security reasons).

This should not happen on a daily basics, but rather indicates some bug in ROR. So, maybe at the moment we’re talking about sth like this. So, it’d be nice if you could provide your config (or at least block which should match) and a curl of the request. I will try to reproduce it in test.

Obviously there should be some error logs which tells us that sth goes wrong. If there is not, I’ll add one after I find what is wrong in this case.

I’ve installed pre8 on the cluster that I discovered the issue on and have been reloading diligently in the past 30 minutes, and so far it still seems to be logging, so it looks like whatever broke in pre6 might be fixed in pre8 - do you still want me to post configs?

I’ve already let folks know we’re running pre8 on that cluster - the others have been reverted - and won’t change any of the others until this has behaved until at least tomorrow morning.

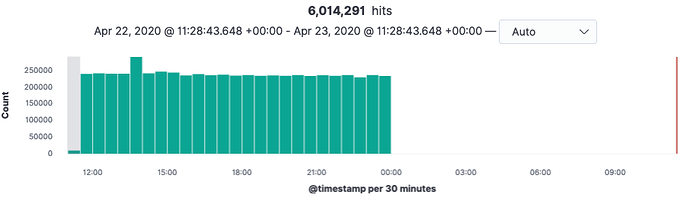

No luck. Carefully monitored after making the change and was hopeful. Notified folks of the upgrade and signed off. Checked this morning and discovered it stopped logging at midnight GMT which is when new indices would be created, so at least now we know what is causing the failure - new index creation.

Here’s our current ROR config on this cluster:

---

# yamllint disable rule:line-length

# THIS FILE IS PROVISIONED BY PUPPET

# However, once it gets loaded into the .readonlyrest index,

# you might need to use an admin account to log into Kibana

# and choose "Load default" from the "ReadonlyREST" tab.

# Alternately, you can use the "update-ror" script in ~cheerschap/bin/

readonlyrest:

enable: true

prompt_for_basic_auth: false

response_if_req_forbidden: Forbidden by ReadonlyREST plugin

ssl:

enable: true

keystore_file: "elasticsearch.jks"

keystore_pass: "redacted"

key_pass: "redacted"

access_control_rules:

# LOCAL: Kibana admin account

- name: "local-admin"

auth_key_unix: "admin:redacted"

kibana_access: admin

# LOCAL: Logstash servers inbound access

- name: "local-logstash"

auth_key_unix: "logstash:redacted"

# Local accounts for routine access should have less verbisity

# to keep the amount of logfile noise down

verbosity: error

# LOCAL: Kibana server

- name: "local-kibana"

auth_key_unix: "kibana:redacted"

verbosity: error

# LOCAL: Puppet communication

- name: "local-puppet"

auth_key_unix: "puppet:redacted"

verbosity: error

# LOCAL: Jenkins communication

- name: "local-jenkins"

auth_key_unix: "jenkins:redacted"

verbosity: error

# LOCAL: Elastalert

- name: "elastalert"

auth_key_unix: "elastalert:redacted"

verbosity: error

# LOCAL: fluentbit

- name: "fluentbit"

auth_key_unix: "fluentbit:redacted"

verbosity: error

# LDAP: Linux admins and extra kibana-admin group

- name: "ldap-admin"

kibana_access: admin

ldap_auth:

name: "ldap1"

groups: ["prod_admins","kibana-admin"]

type: allow

# LDAP for everyone else

- name: "ldap-all"

# possibly include: "kibana:dev_tools",

kibana_hide_apps: ["readonlyrest_kbn", "timelion", "kibana:management", "apm", "infra:home", "infra:logs"]

ldap_auth:

name: "ldap1"

groups: ["kibana-admin", "admins", "prod-admins", "devqa", "development", "ipausers"]

type: allow

# Allow localhost

- name: "localhost"

hosts: [127.0.0.1]

verbosity: error

# Define the LDAP connection

ldaps:

- name: ldap1

hosts: ["ldap1", "ldap2"]

ha: "FAILOVER"

port: 636

bind_dn: "uid=xx,cn=xx,cn=xx,dc=xx,dc=xx,dc=xx"

bind_password: "redacted"

ssl_enabled: true

ssl_trust_all_certs: true

search_user_base_DN: "cn=users,cn=accounts,dc=m0,dc=sysint,dc=local"

search_groups_base_DN: "cn=groups,cn=accounts,dc=m0,dc=sysint,dc=local"

user_id_attribute: "uid"

unique_member_attribute: "member"

connection_pool_size: 10

connection_timeout_in_sec: 30

request_timeout_in_sec: 30

cache_ttl_in_sec: 60

group_search_filter: "(objectclass=top)"

group_name_attribute: "cn"

The logstash systems use (appropriately enough) the “logstash” user for auth and as you can see I have verbosity set to “error” because otherwise the logs get VERY noisy with the constant auth. Elasticsearch logs are hard enough to parse as it is.

Let me know what else I can provide.

Your settings look ok to me, and I’m surpriesed you don’t have any anomalies in the ES logs (coming from ROR or not). @coutoPL WDYT?

@cmh you know, I don’t fully understand your infrastructure, so I don’t feel that I understand what kind of failure we talking about. ROR doesn’t work at all? Is there any crash maybe? Are you able to access your cluster, through ROR eg using curl?

Quick overview of the infrastructure:

- Filebeat running on the systems, mostly 1.3.1 on prod systems but migrating to 7.6.2 on newer systems. These systems send logs to logstash.

- A pair of Logstash servers in each instance running 7.6.2. They hand off to the elasticsearch cluster using credentials and SSL configured in RoR

- Elasticsearch cluster locked to 7.5.0 at the moment, at least 4 nodes but bigger clusters have more. Indices are grouped by app and date coded, for example

syslog-2020.04.23 - Kibana for the UI running RoR pro kibana plugin, also locked to 7.5.0

RoR 1.18.9 works as expected but we have the LDAP timeout issue where after LDAP auth isn’t used for a period of time, the connection gets dropped and won’t reconnect until the RoR config is changed which gets it to reconnect.

Using these 1.19.5_pre versions, the LDAP auth is certainly fixed, and logging/auth seems to work until we cross the midnight GMT time when new indices would be created at which point logstash starts seeing the 403 errors mentioned above. Reverting to 1.18.9 fixes that issue. What is interesting is that when the bulk updates are getting the 403 error, I’m able to auth and run basic API commands using the logstash local account.

Please let me know if I can provide extra information or clarification.

@cmh great explained. So, I expect (I said that before and know I’m pretty sure this is it) that this issue is not strictly connected to previous LDAP issue and 1.19.5-pre6 version, but one of our changes between 1.18.9 and this one.

I suspect there is sth wrong with _bulk request handling. I write proper integration test for it today and later let you know if I found sth.

Thanks!