Hello once again,

I was just wondering whether there is any text/size limit for the ROR settings in the Kibana GUI, because I want to add a user and I cannot save the settings. I have 1200 rows.

Could you please let us know what error you see when you try to save your settings? Do you see anything related to this issue in the ES logs?

I tried to reproduce it with a ROR settings file of 5000+ lines, and I don’t see any problems. You can check it here: [RORDEV-1305] reproduction attempt by coutoPL · Pull Request #51 · beshu-tech/ror-sandbox · GitHub

Regarding ROR settings limits: there is indeed one. The maximum file size is 3 MB (the default; it can be changed if needed). It exists to protect against the Billion laughs attack, and it applies to the size of the file after expansion (so if you use YAML reference macros, the file itself may not be that long, yet the limit can still be reached).

Hello,

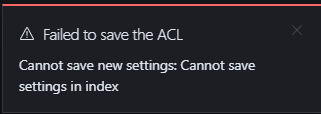

This is the only information I have:

In the ES logs I can see:

[2024-10-17T09:28:12,046][ERROR][t.b.r.e.s.EsIndexJsonContentService] [esmaster01] Cannot write to document [.readonlyrest ID=1]

org.elasticsearch.index.mapper.DocumentParsingException: [1:13] failed to parse field [settings] of type [keyword] in document with id '1'. Preview of field's value: 'readonlyrest:

And also:

group_name_attribute: "cn"'

at org.elasticsearch.index.mapper.FieldMapper.rethrowAsDocumentParsingException(FieldMapper.java:234) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.index.mapper.FieldMapper.parse(FieldMapper.java:187) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.index.mapper.DocumentParser.parseObjectOrField(DocumentParser.java:450) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.index.mapper.DocumentParser.parseValue(DocumentParser.java:775) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.index.mapper.DocumentParser.innerParseObject(DocumentParser.java:368) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.index.mapper.DocumentParser.parseObjectOrNested(DocumentParser.java:319) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.index.mapper.DocumentParser.internalParseDocument(DocumentParser.java:143) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.index.mapper.DocumentParser.parseDocument(DocumentParser.java:88) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.index.mapper.DocumentMapper.parse(DocumentMapper.java:112) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.index.shard.IndexShard.prepareIndex(IndexShard.java:1038) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.index.shard.IndexShard.applyIndexOperation(IndexShard.java:979) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.index.shard.IndexShard.applyIndexOperationOnPrimary(IndexShard.java:923) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.action.bulk.TransportShardBulkAction.executeBulkItemRequest(TransportShardBulkAction.java:376) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.action.bulk.TransportShardBulkAction$2.doRun(TransportShardBulkAction.java:234) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:26) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.action.bulk.TransportShardBulkAction.performOnPrimary(TransportShardBulkAction.java:302) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.action.bulk.TransportShardBulkAction.dispatchedShardOperationOnPrimary(TransportShardBulkAction.java:150) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.action.bulk.TransportShardBulkAction.dispatchedShardOperationOnPrimary(TransportShardBulkAction.java:78) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.action.support.replication.TransportWriteAction$1.doRun(TransportWriteAction.java:217) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:26) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.common.util.concurrent.TimedRunnable.doRun(TimedRunnable.java:33) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingAbstractRunnable.doRun(ThreadContext.java:984) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:26) ~[elasticsearch-8.15.2.jar:?]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1144) ~[?:?]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:642) ~[?:?]

at java.lang.Thread.run(Thread.java:1570) ~[?:?]

at map @ tech.beshu.ror.es.services.EsIndexJsonContentService.saveContent(EsIndexJsonContentService.scala:108) ~[?:?]

at map @ tech.beshu.ror.configuration.index.IndexConfigManager.save(IndexConfigManager.scala:63) ~[?:?]

at map @ tech.beshu.ror.boot.engines.MainConfigBasedReloadableEngine.saveConfig(MainConfigBasedReloadableEngine.scala:124) ~[?:?]

at map @ tech.beshu.ror.configuration.ReadonlyRestEsConfig$.load$$anonfun$1$$anonfun$1(ReadonlyRestEsConfig.scala:36) ~[?:?]

at map @ tech.beshu.ror.configuration.ReadonlyRestEsConfig$.load$$anonfun$1$$anonfun$1(ReadonlyRestEsConfig.scala:36) ~[?:?]

at sequence @ tech.beshu.ror.accesscontrol.utils.AsyncDecoderCreator$$anon$5.apply(ADecoder.scala:183) ~[?:?]

at sequence @ tech.beshu.ror.accesscontrol.blocks.definitions.ldap.implementations.UnboundidLdapConnectionPoolProvider$.resolveHostnames(UnboundidLdapConnectionPoolProvider.scala:189) ~[?:?]

at sequence @ tech.beshu.ror.accesscontrol.blocks.definitions.ldap.implementations.UnboundidLdapConnectionPoolProvider$.resolveHostnames(UnboundidLdapConnectionPoolProvider.scala:189) ~[?:?]

at sequence @ tech.beshu.ror.accesscontrol.blocks.definitions.ldap.implementations.UnboundidLdapConnectionPoolProvider$.resolveHostnames(UnboundidLdapConnectionPoolProvider.scala:189) ~[?:?]

at sequence @ tech.beshu.ror.accesscontrol.blocks.definitions.ldap.implementations.UnboundidLdapConnectionPoolProvider$.resolveHostnames(UnboundidLdapConnectionPoolProvider.scala:189) ~[?:?]

at sequence @ tech.beshu.ror.accesscontrol.blocks.definitions.ldap.implementations.UnboundidLdapConnectionPoolProvider$.resolveHostnames(UnboundidLdapConnectionPoolProvider.scala:189) ~[?:?]

Caused by: java.lang.IllegalArgumentException: Document contains at least one immense term in field="settings" (whose UTF8 encoding is longer than the max length 32766), all of which were skipped. Please correct the analyzer to not produce such terms. The prefix of the first immense term is: '[114, 101, 97, 100, 111, 110, 108, 121, 114, 101, 115, 116, 58, 13, 10, 13, 10, 32, 32, 32, 32, 97, 117, 100, 105, 116, 95, 99, 111, 108]...'

at org.elasticsearch.index.mapper.KeywordFieldMapper.indexValue(KeywordFieldMapper.java:952) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.index.mapper.KeywordFieldMapper.parseCreateField(KeywordFieldMapper.java:895) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.index.mapper.FieldMapper.parse(FieldMapper.java:185) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.index.mapper.DocumentParser.parseObjectOrField(DocumentParser.java:450) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.index.mapper.DocumentParser.parseValue(DocumentParser.java:775) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.index.mapper.DocumentParser.innerParseObject(DocumentParser.java:368) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.index.mapper.DocumentParser.parseObjectOrNested(DocumentParser.java:319) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.index.mapper.DocumentParser.internalParseDocument(DocumentParser.java:143) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.index.mapper.DocumentParser.parseDocument(DocumentParser.java:88) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.index.mapper.DocumentMapper.parse(DocumentMapper.java:112) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.index.shard.IndexShard.prepareIndex(IndexShard.java:1038) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.index.shard.IndexShard.applyIndexOperation(IndexShard.java:979) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.index.shard.IndexShard.applyIndexOperationOnPrimary(IndexShard.java:923) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.action.bulk.TransportShardBulkAction.executeBulkItemRequest(TransportShardBulkAction.java:376) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.action.bulk.TransportShardBulkAction$2.doRun(TransportShardBulkAction.java:234) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:26) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.action.bulk.TransportShardBulkAction.performOnPrimary(TransportShardBulkAction.java:302) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.action.bulk.TransportShardBulkAction.dispatchedShardOperationOnPrimary(TransportShardBulkAction.java:150) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.action.bulk.TransportShardBulkAction.dispatchedShardOperationOnPrimary(TransportShardBulkAction.java:78) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.action.support.replication.TransportWriteAction$1.doRun(TransportWriteAction.java:217) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:26) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.common.util.concurrent.TimedRunnable.doRun(TimedRunnable.java:33) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingAbstractRunnable.doRun(ThreadContext.java:984) ~[elasticsearch-8.15.2.jar:?]

at org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:26) ~[elasticsearch-8.15.2.jar:?]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1144) ~[?:?]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:642) ~[?:?]

at java.lang.Thread.run(Thread.java:1570) ~[?:?]

[2024-10-17T09:28:12,047][WARN ][t.b.r.b.e.MainConfigBasedReloadableEngine] [esmaster01] [1115979645-742970552] ROR is being stopped! Loading main settings skipped!

I am using the ReadonlyREST ACL editor in the Kibana GUI.
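
For anyone reading along: the `Caused by` line in the log above says the `settings` field is a `keyword` and that its UTF-8 encoding is longer than 32766 bytes. That limit is counted in bytes, not in rows or characters. Below is a minimal Python sketch for checking a settings text against that number before saving. The function names and the sample settings body are made up for illustration; only the 32766 value comes from the log.

```python
# Limit quoted in the ES log above ("max length 32766"); it is counted
# in UTF-8 bytes, not in characters or rows.
MAX_TERM_BYTES = 32766


def utf8_size(text: str) -> int:
    """Return the size of the text in bytes once encoded as UTF-8."""
    return len(text.encode("utf-8"))


def exceeds_keyword_limit(text: str, limit: int = MAX_TERM_BYTES) -> bool:
    """True if the text is too long to be indexed as a single keyword term."""
    return utf8_size(text) > limit


if __name__ == "__main__":
    # Hypothetical settings body: a header plus 1200 similar rows.
    # Real settings would be read from the editor or from a file instead.
    sample = "readonlyrest:\r\n" + "    - name: example_rule_row\r\n" * 1200
    print("size in bytes:", utf8_size(sample))
    print("over the limit:", exceeds_keyword_limit(sample))
```

Note that non-ASCII characters take more than one byte each, so a file can cross the limit with fewer characters than the number suggests.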

Could you please send me the following:

You can send them using a private message.

Hello,

If someone has a similar issue, try this:

It helped me, and now I am above 1200 rows and can add new users or create groups.