Support request

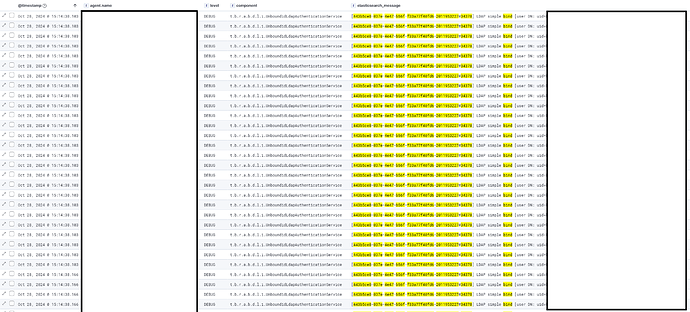

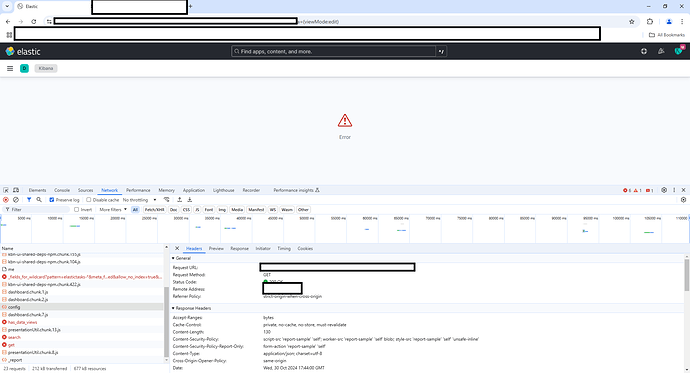

Hey, we're using LDAP to authenticate to Kibana. After migrating our ELK stack from 6.x to 7.x, we started seeing strange behaviour. After a user changes their LDAP password, opening Kibana returns a Forbidden error instead of redirecting to the login page. Clearing cookies and cache fixes it, and the user is then redirected to the login page as expected. The issue persists after upgrading everything to 8.x. Here's what Kibana logs:

```
Dec 01 13:23:32 laaskb002d1vteo kibana[1855]: [13:23:32:443] [error][plugins][ReadonlyREST][esClient] ES Authorization error: 403 Error: ES Authorization error: 403
Dec 01 13:23:32 laaskb002d1vteo kibana[1855]: at l.e (/usr/share/kibana/plugins/readonlyrestkbn/proxy/core/esClient.js:1:17932)
Dec 01 13:23:32 laaskb002d1vteo kibana[1855]: at l.e (/usr/share/kibana/plugins/readonlyrestkbn/proxy/core/esClient.js:1:5483)
Dec 01 13:23:32 laaskb002d1vteo kibana[1855]: at tryCatch (/usr/share/kibana/plugins/readonlyrestkbn/node_modules/regenerator-runtime/runtime.js:45:40)
Dec 01 13:23:32 laaskb002d1vteo kibana[1855]: at Generator.invoke [as _invoke] (/usr/share/kibana/plugins/readonlyrestkbn/node_modules/regenerator-runtime/runtime.js:274:22)
Dec 01 13:23:32 laaskb002d1vteo kibana[1855]: at Generator.prototype.<computed> [as next] (/usr/share/kibana/plugins/readonlyrestkbn/node_modules/regenerator-runtime/runtime.js:97:21)
Dec 01 13:23:32 laaskb002d1vteo kibana[1855]: at asyncGeneratorStep (/usr/share/kibana/plugins/readonlyrestkbn/node_modules/@babel/runtime/helpers/asyncToGenerator.js:3:24)
Dec 01 13:23:32 laaskb002d1vteo kibana[1855]: at _next (/usr/share/kibana/plugins/readonlyrestkbn/node_modules/@babel/runtime/helpers/asyncToGenerator.js:25:9)
Dec 01 13:23:32 laaskb002d1vteo kibana[1855]: at processTicksAndRejections (node:internal/process/task_queues:95:5)
Dec 01 13:23:32 laaskb002d1vteo kibana[1855]: [13:23:32:445] [info][plugins][ReadonlyREST][authorizationHeadersValidation] Could not revalidate the session against ES: + WRONG_CREDENTIALS
```

RoR LDAP config:

```yaml
ldaps:
  - name: adform
    ssl_trust_all_certs: true
    bind_dn: ...
    bind_password: ...
    search_user_base_DN: ...
    search_groups_base_DN: ...
    user_id_attribute: ...
    unique_member_attribute: '...'
    group_search_filter: (objectClass=group)
    connection_pool_size: 10
    connection_timeout_in_sec: 15
    request_timeout_in_sec: 15
    cache_ttl_in_sec: 60
    servers:
      - "ldaps://....com:636"
```

Is this expected behaviour?

ROR Version: 1.54.0

Kibana Version: 8.10.4

Elasticsearch Version: 8.10.4

{"customer_id": "a2d8a38b-1070-4845-aa8e-6f38fb585857", "subscription_id": "c6f3569d-3d8e-46ce-ac53-92f19301b69e"}